Tim posing with a recurrent neural network

Tim Cooijmans

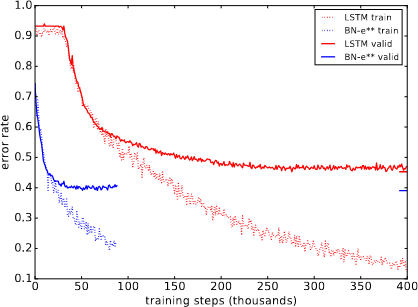

I am an ML&AI researcher interested in the dynamics of learning systems, such as agents updating their beliefs or updating their parameters by gradient descent. My focus is on the stability of learning, by which I mean that the agent maintains its ability to pursue its goals. With Recurrent Batch Normalization, I stabilized the hidden-state process of recurrent systems, dramatically improving their training and generalization.

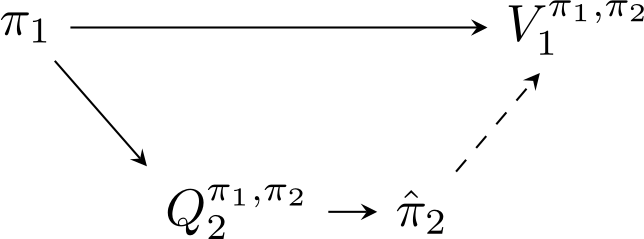

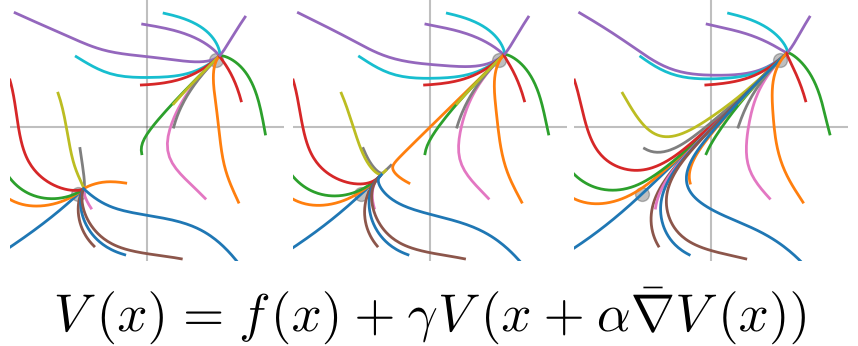

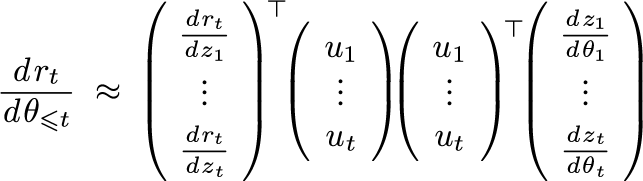

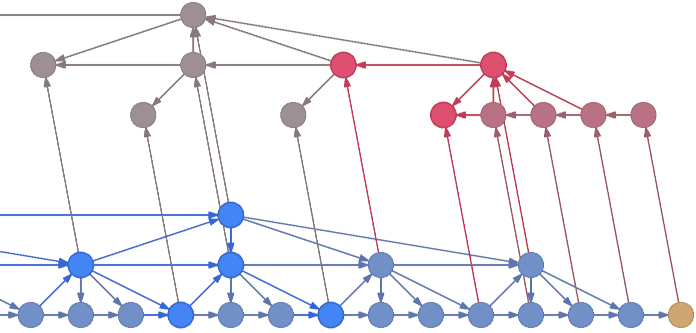

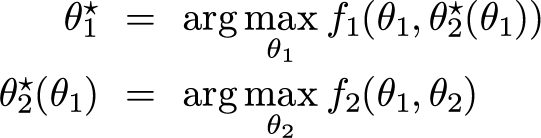

I am currently working in the area of multi-agent reinforcement learning (MARL), which exaggerates some of the difficulties of single-agent (and supervised) learning and introduces several new ones. In my view, the failure of gradient descent on MARL problems is fundamental, and its study will lead to new perspectives on, and opportunities for, learning in general. In Meta-Value Learning, I propose an algorithm that generates a surrogate (the meta-value) on which gradient descent is stable and which, I suspect, finds global (Pareto) optima in principle.

Publications

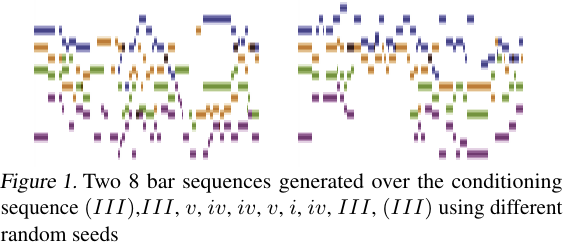

Coordination in Generative Modeling, Automatic Differentiation and Multi-Agent Learning

PhD dissertation, 2024.

Meta-Value Learning: solving social dilemmas with a novel general meta-learning framework.

ICML 2023 Frontiers workshop.

Best-Response Shaping: solving social dilemmas by differentiating through the best response.

RLC 2024.

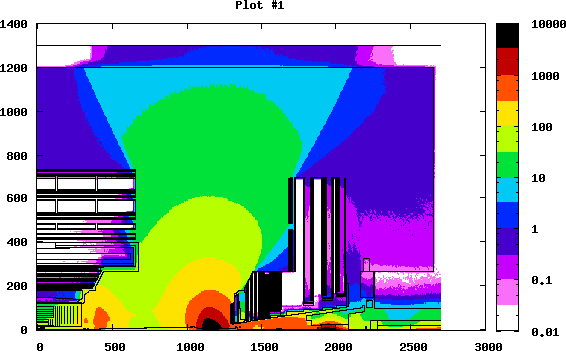

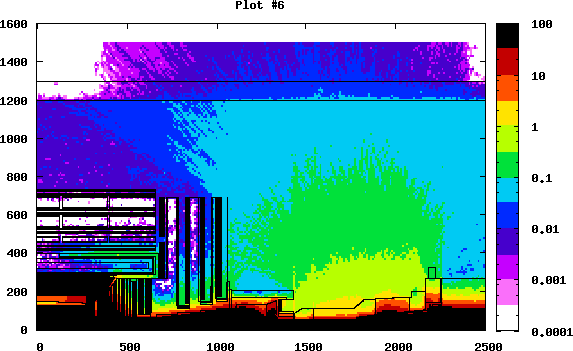

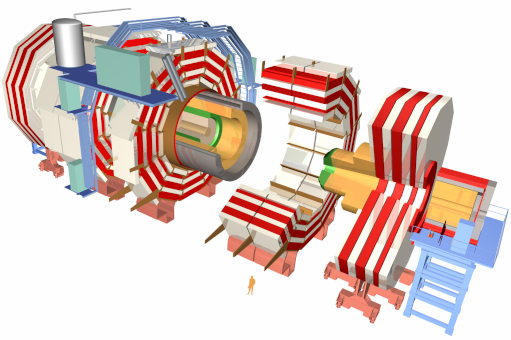

Monte-Carlo simulations of the radiation environment for the CMS experiment.

13th Pisa Meeting on Advanced Detectors, Elba, 2015.